Conversational AI is about making machines communicate in as human a way as possible by understanding natural language. These systems can be chatbots, voice bots, virtual assistants, and more. In practice, they differ from each other in the language and data they were trained on. However, one key feature ties them all together: their ability to understand natural language and respond to it.

GPT-3 (Generative Pre-trained Transformer 3) is a language model made by OpenAI, a research lab backed by big tech companies such as Microsoft. It was in beta testing through an API as of July 2020. It’s based on a famous deep learning architecture called the Transformer, published in 2017, and it's generative because, unlike neural networks that output a numeric score or a yes-or-no answer, it can generate long sequences of original text as its output.

GPT-3 can do many things, such as question answering, summarizing articles, and information retrieval, and it can even provide you with code snippets! What makes it unique is its size and the development it went through, which brought it closer to human performance than earlier models on many tasks.

How to make your GPT

To create our own GPT language model, we need to find out what we need and how to do it. As we know, the main building blocks of deep learning are the dataset and computing power. So, let’s look at each of them.

Let's start with the dataset used to train GPT-3. OpenAI collected data from the internet amounting to about 499 billion tokens (roughly, words or word pieces), compared with GPT-2, which was trained on about 10 billion tokens, or about 40GB of text. Scaling by the same ratio puts GPT-3's training data at roughly 2TB. Here is the breakdown of the data:

| Dataset | # Tokens (Billions) |
| --- | --- |
| Total | 499 |
| Common Crawl (filtered by quality) | 410 |
| Web Text2 | 19 |
| Books 1 | 12 |
| Books 2 | 55 |
| Wikipedia | 3 |
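
To make the breakdown easier to read, here is a minimal Python sketch that computes each dataset's share of the total and repeats the rough size extrapolation from GPT-2 (about 10 billion tokens in about 40GB). The function names are made up for this example, and the linear tokens-to-gigabytes scaling is an assumption, not a figure reported by OpenAI.

```python
# Token counts (in billions) copied from the table above.
TOKENS_BILLIONS = {
    "Common Crawl (filtered by quality)": 410,
    "Web Text2": 19,
    "Books 1": 12,
    "Books 2": 55,
    "Wikipedia": 3,
}


def token_shares(counts):
    """Return each dataset's share of the total token count, in percent."""
    total = sum(counts.values())
    return {name: 100 * n / total for name, n in counts.items()}


def extrapolate_size_gb(tokens_billions, ref_tokens_billions=10, ref_size_gb=40):
    """Scale a reference corpus size linearly by token count.

    Assumption: bytes per token are the same as in the GPT-2 figures
    quoted in the article (10 billion tokens, about 40GB).
    """
    return tokens_billions / ref_tokens_billions * ref_size_gb


total = sum(TOKENS_BILLIONS.values())
shares = token_shares(TOKENS_BILLIONS)

for name, pct in shares.items():
    print(f"{name:36s} {pct:5.1f}%")
print(f"Total tokens: {total} billion")
print(f"Extrapolated size: {extrapolate_size_gb(total):.0f} GB")
```

Under these assumptions, filtered Common Crawl makes up the large majority of the tokens, and the extrapolated size lands close to the 2TB quoted above.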

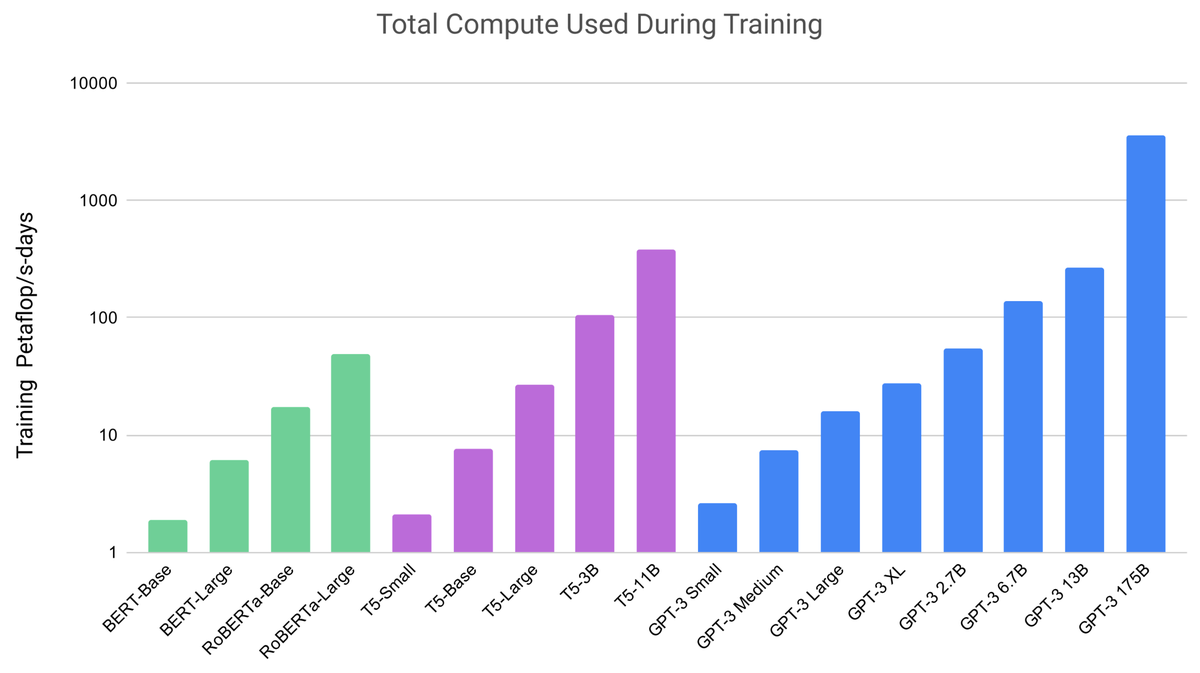

Continuing with computing power, OpenAI found that to do well on their increasingly large datasets, they had to add more and more weights. For comparison, Google's BERT-base model has 110 million weights, and GPT-1 was of similar size at about 117 million. With GPT-2, the count went up to 1.5 billion weights. With GPT-3, the number of parameters reached 175 billion, making it the largest language model published at the time of its release.

Building a model with 175 billion weights is not simply a matter of adding parameters; training it becomes an incredible exercise in parallel computing. Figure 1 shows how it compares to other models.

Figure 1. GPT-3 training chart compared to others

Source from here

OpenAI hasn't described the exact hardware configuration used for training, but others have reported that it ran on a cluster of Nvidia V100 GPUs in Microsoft Azure. The company did describe the total compute required: 3,640 petaflop/s-days, the equivalent of running one thousand trillion floating-point operations per second for 3,640 days. To make that easier to imagine: by Lambda Labs' estimate, training on a single V100 (a top-end GPU when GPT-3 was trained) would take about 355 years, and a single training run would cost an estimated $4.6 million.
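
The arithmetic behind this kind of estimate can be sketched in a few lines of Python. The 3,640 petaflop/s-days figure comes from the text above; the per-GPU throughput (28 TFLOPS) and the hourly price ($1.50) are assumed values chosen for illustration, and the function names are made up for this example.

```python
SECONDS_PER_DAY = 86_400
HOURS_PER_YEAR = 365 * 24


def total_flops(petaflops_days):
    """Convert petaflop/s-days into a total number of floating-point operations."""
    return petaflops_days * 1e15 * SECONDS_PER_DAY


def single_gpu_years(petaflops_days, gpu_flops_per_sec):
    """Years one GPU would need, assuming it sustains the given throughput."""
    seconds = total_flops(petaflops_days) / gpu_flops_per_sec
    return seconds / (HOURS_PER_YEAR * 3600)


def training_cost_usd(gpu_years, usd_per_gpu_hour):
    """Cost of renting GPUs for the given number of GPU-years."""
    return gpu_years * HOURS_PER_YEAR * usd_per_gpu_hour


PFLOPS_DAYS = 3640            # total compute quoted in the article
ASSUMED_GPU_FLOPS = 28e12     # assumption: 28 TFLOPS sustained on one V100
ASSUMED_USD_PER_HOUR = 1.50   # assumption: cloud price per V100-hour

years = single_gpu_years(PFLOPS_DAYS, ASSUMED_GPU_FLOPS)
cost = training_cost_usd(years, ASSUMED_USD_PER_HOUR)

print(f"Single-GPU training time: {years:.0f} years")
print(f"Estimated cost: ${cost / 1e6:.2f} million")
```

Both results are very sensitive to the assumed throughput and price, so treat them as order-of-magnitude estimates. In practice the work is spread across many GPUs, which shortens the wall-clock time but not the total GPU-hours.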

Computing power is not the only problem; there is also the capacity needed to hold 175 billion weights. Each parameter is a 32-bit float (4 bytes), so the weights alone require about 700GB of GPU RAM, more than 20 times the 32GB available on the largest V100.
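
The memory figure follows directly from the parameter count. Here is a small Python sketch of the calculation; the 32GB per-GPU memory is an assumed value (the ratio changes with the GPU model), and the helper names are made up for this example.

```python
import math


def weights_memory_gb(n_params, bytes_per_param=4):
    """Memory needed to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


def gpus_needed(memory_gb, gpu_memory_gb):
    """Minimum number of GPUs whose combined memory can hold the weights."""
    return math.ceil(memory_gb / gpu_memory_gb)


N_PARAMS = 175e9             # parameter count quoted in the article
ASSUMED_GPU_MEMORY_GB = 32   # assumption: memory available on one GPU

fp32_gb = weights_memory_gb(N_PARAMS, bytes_per_param=4)  # 32-bit floats
fp16_gb = weights_memory_gb(N_PARAMS, bytes_per_param=2)  # 16-bit floats

print(f"FP32 weights: {fp32_gb:.0f} GB, "
      f"at least {gpus_needed(fp32_gb, ASSUMED_GPU_MEMORY_GB)} GPUs")
print(f"FP16 weights: {fp16_gb:.0f} GB, "
      f"at least {gpus_needed(fp16_gb, ASSUMED_GPU_MEMORY_GB)} GPUs")
```

This counts only the weights. Training also needs memory for gradients, optimizer state, and activations, so the real requirement is considerably higher.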

If you are still up for the challenge and want to experiment with a GPT model, you can try GPT-Neo. It’s an implementation of model- and data-parallel GPT-2 and GPT-3-like models, with the ability to scale up to full GPT-3 sizes (and possibly more!), using the Mesh TensorFlow library.

But is it really worth it? Next, we will discuss its limitations.

Limitations of GPT-3

So what can GPT-3 do? Well, for one thing, it can answer questions on a wide range of topics while retaining the context of previous questions. Figure 2 shows examples of questions GPT-3 got right.

Figure 2. GPT-3 answering correctly

Source from here

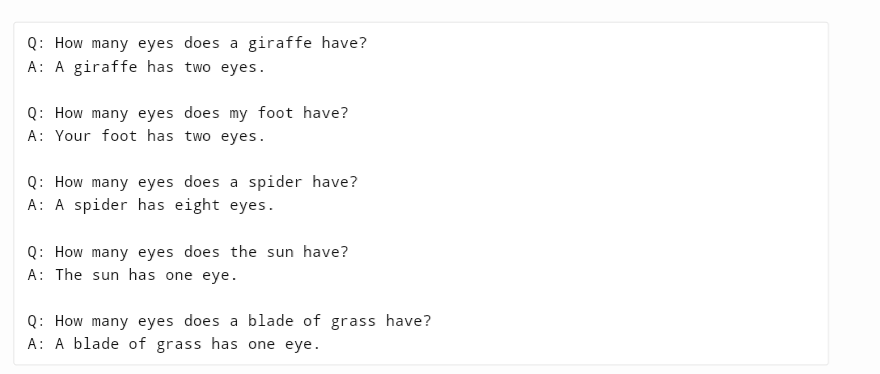

So, when does the GPT-3 model fail?

GPT-3 knows how to have a normal conversation, but it doesn’t quite know how to say “Wait a moment, your question is nonsense.” It also doesn’t know how to say “I don’t know,” as figure 3 shows.

Figure 3. GPT-3 answering nonsense questions

Source from here

People are used to computers being super smart at logical activities, like playing chess or adding numbers. It might come as a surprise that GPT-3 is not perfect at simple math, as the questions in figure 4 show.

Figure 4. GPT-3 confused by simple math

Source from here

This problem shows up in more human questions as well, for example when you ask it about the result of a sequence of operations. You can see that in figure 5.

Figure 5. GPT-3 fails on a sequence of operations

Source from here

It’s like GPT-3 has limited short-term memory, and has trouble reasoning about more than one or two objects in a sentence.

The biggest problem with GPT-3 is that, like most neural networks, it is a black box. It’s captivating because it answers such a vast array of questions correctly, but it also gets quite a few of them wrong, as we saw above. When GPT-3 falls short, there is no way to debug it or pinpoint the source of the error. A customer-facing interface that cannot be iterated on and revised is neither sustainable nor scalable in a business environment.

This is another aspect in which current conversational AI solutions are better than GPT-3. Even simple chatbots allow their users to alter and improve their conversational flows as needed. With more sophisticated conversational AI interfaces, users not only get a clear snapshot of the error but can also track down, diagnose, and remedy the issue quickly.

Conclusion

GPT-3 is quite impressive in some areas and still clearly subhuman in others, but it’s far from ready to be used in real products. Businesses looking to provide their audiences with engaging, timely, and helpful conversational experiences will continue to rely on existing conversational AI solutions.

I still hope that with a better understanding of its strengths and weaknesses, we data scientists will be better equipped to use modern language models like GPT-3 in a business environment with real products.

Resources:

- https://en.wikipedia.org/wiki/GPT-3

- https://lambdalabs.com/blog/demystifying-gpt-3/

- https://www.zdnet.com/article/what-is-gpt-3-everything-business-needs-to-know-about-openais-breakthrough-ai-language-program/

- https://arxiv.org/abs/2005.14165

- https://lacker.io/ai/2020/07/06/giving-gpt-3-a-turing-test.html